Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Are you a developer concerned that AI might threaten your job? Based on my experience with Cursor — an IDE fully integrated with AI — I’d say the general perception holds true: junior developer roles are the most at risk, at least for now.

Curious how it actually feels to use cutting-edge AI coding tools? In this article, I share what Cursor did well (and where it fell short), what tech stack I used, and how I built and deployed a functional full-stack app — using only free hosting and AI-driven development.

Cursor AI was recently evaluated at my workplace, Playtika, as a potential alternative to GitHub Copilot.

Although I haven’t been actively coding for the past three years — my role has shifted more toward engineering management — I was curious to see how the developer experience has evolved. I wanted to understand how tools like Cursor are reshaping the coding workflow, especially compared to what I was used to three years ago.

So I took Cursor for a spin, built a real-world app from scratch, and documented my experience — the good, the bad, and everything in between.

Before diving into the development experience, I wanted to get a broader sense of what tools are currently available. There’s no shortage of AI-assisted coding solutions — but their capabilities, focus areas, and maturity levels vary widely.

I’ve skipped pricing details in this comparison. Some tools are free, but in my experience, the best-performing ones typically aren’t. Most of them cost up to $20/month — including Cursor.

Below, I’ve listed the most relevant tools, roughly ordered by user adoption (higher first):

| Tool | AI Agent Mode | Customization | AI Models Integration | Editor Base | Target Audience |
| --- | --- | --- | --- | --- | --- |
| GitHub Copilot | ❌ No | Low | OpenAI, Claude, Gemini | VS Code, JetBrains | Devs using GitHub |
| Cursor AI | ✅ Yes | High | OpenAI, Claude, Gemini, Mistral, DeepSeek | VS Code fork | Devs seeking full AI IDE |
| Amazon CodeWhisperer | ❌ No | Low | Amazon proprietary | VS Code, JetBrains | AWS devs |
| Windsurf | ✅ Yes (Cascade) | Medium | Custom (Cascade & Supercomplete) | VS Code | Advanced VS Code users |
| Void | ✅ Yes | Very high | Custom/local/self-hosted LLMs | VS Code fork | Open-source enthusiasts & privacy-focused devs |

One of the most notable evolutions in AI-assisted development is the rise of Agent Mode — where AI becomes more than a smart autocomplete.

Instead of suggesting lines of code, an AI agent can:

This shifts the role of AI from helping you type faster to actually taking work off your plate — like delegating to another developer.

Whether that potential translates into real productivity gains? That’s what I set out to test.

Windsurf appeared to be the most complete alternative to Cursor, particularly due to its Cascade agent and scripting capabilities. However, its relatively low adoption and limited community support made me hesitant to invest time into testing it at this stage. I plan to revisit it once it gains more traction and maturity.

As for Void, its focus on open-source and self-hosted models is compelling — especially for privacy-conscious teams or those with custom infrastructure needs. That said, I currently expect the performance and reliability of those models to lag behind leading proprietary solutions, so I’ve chosen to hold off on evaluating it for now.

To better understand how Cursor is used in practice, I reviewed a variety of sources (LinkedIn posts, blog articles, YouTube videos) and asked developers I know for their feedback.

While this was by no means an exhaustive study, I noticed a few recurring patterns:

🧪 Building PoC apps. Most public examples I found were relatively simple — focused on building small projects, often without deploying them to production or evolving them further. These seemed aimed more at learning or experimentation than long-term development.

In many cases, the content reminded me of my academic exercises: easy to follow in a controlled setting, but not easily transferable to more complex, real-world scenarios.

That said, I was surprised by the range of technologies people were enthusiastic to use it for — from machine learning to 3D modeling.

💼 Personal Branding and Selling. Some individuals appeared to be using Cursor primarily for personal branding, to promote their services, or to make predictions about its potential to replace developers’ work, rather than to showcase concrete development workflows.

⚙️ Copilot-like usage. When it comes to more experienced developers, their use of Cursor seemed more conservative — typically limited to well-scoped, small tasks within a single file, similar to how GitHub Copilot or even ChatGPT is often used.

That said, this is just what I was able to observe from public content. It’s entirely possible that more advanced or large-scale use cases exist, but I may have simply missed them.

Of all the resources I found, one of the most helpful was this concise guide with practical tips for using Cursor more effectively.

To make the most of this experiment, I chose a project I genuinely care about: a custom platform for booking tours, which I plan to use as the foundation for a future startup.

The goal was to stay motivated beyond just a demo — building something useful, not just a throwaway proof of concept.

I started with some brainstorming and drafted the high-level requirements for the MVP. I prioritized features based on relevance and feasibility, and documented everything in a plain text file.

To keep development fast and focused, I chose a monolithic architecture, planning to revisit scalability and maintainability later if the project gains traction.

My thought process on choosing the tech stack was to identify technologies that are:

I validated my thinking in a quick back-and-forth with ChatGPT, and ended up with the following stack:

| Layer | Tech |
| --- | --- |
| Architecture | Full-stack serverless monolith |
| Frontend | Next.js + React + Tailwind CSS |
| Backend | Next.js API Routes (serverless functions) |
| Database | Supabase (PostgreSQL) |
| ORM | Prisma |
| Hosting | Vercel (frontend + API) + Supabase DB |
| Versioning | GitHub |

To get started, I created a ReqAndPlan.md file containing:

I asked ChatGPT to help me structure this document clearly, then used it as context when setting up my project in Cursor AI.

After installing Cursor (which includes a free 2-week trial), I opened a new project folder, added the markdown file, and set it as the agent’s working context. Then I gave it a simple prompt:

“Set up the project with the specified tech stack.”

The result was impressive.

Cursor handled:

Where manual intervention was needed:

Still, these were all minor. Everything worked smoothly, and in less than an hour I had a functional skeleton app up and running, visually and structurally similar to a static WordPress site.

Here’s the final output from the Cursor AI agent.

From this point on, I started implementing features one by one. My goal was to stay as high-level as possible — writing natural-language instructions to the AI agent, instead of coding directly.

I didn’t strictly follow the initial development plan. Like in any real-world project, priorities shifted along the way.

Rather than go through every single feature, I’ll focus on the most relevant takeaways: what worked, what didn’t, and where Cursor fits best (IMO) in a developer’s workflow.

By the way, once the free trial expired, I chose to continue with a paid subscription — a small spoiler that I found value in the tool.

You can follow the actual feature evolution in my GitHub repo (more up to date than the original ReqAndPlan.md file).

Once I had a basic version of the app working locally, I moved on to production deployment.

Cursor was helpful here — but not without issues. The deployment setup required a lot more hands-on guidance than the initial app scaffolding.

Despite these issues, I managed to get everything deployed — but it definitely required developer experience and attention to detail. This is not something I’d expect to be done correctly by someone inexperienced.

One area where Cursor really shined was in handling UI changes. When given clear, precise instructions, it was often fully autonomous — making correct changes and updating styles without any direct coding from my side.

Cursor responded well to most UI instructions — whether they were precise or just described the intended functionality.

It made coordinated changes across relevant components without needing detailed file references. For example, when asked:

“Change the site color theme from purple to green”

…it updated styles consistently across the app.

When I pointed out visual bugs (like layout shifts or styling inconsistencies), it usually fixed them without further help — much like addressing a QA ticket.

Cursor instruction and output:

UI result:

Cursor instruction and output:

UI result:

Cursor instruction and output:

UI result:

This was one of the areas where Cursor felt the most like a reliable junior front-end developer — fast, responsive to feedback, and often able to improve design choices on its own. It’s also where AI Agent Mode felt closest to delivering a real productivity boost: as long as the requirements were concrete, Cursor’s UI changes were fast and effective.

For me personally, this was also the area I was least familiar with, and where I would have otherwise spent the most time and effort if coding everything manually. Letting the AI handle the visual implementation while I focused on functionality made a clear difference.

For common, well-documented tasks like Google authentication or basic CRUD operations, Cursor performed surprisingly well — especially when given clearly written, functional requirements.

These types of tasks hit the sweet spot for AI-assisted development: predictable structure, clear goals, and limited cross-file dependencies.

For example, a request like:

“Create a form to add new tours, with fields for title, location, image, and description.”

…resulted in fully functional code that only needed minor QA on my end. The only issue was that it initially saved images to a local folder, which didn’t work in production, so I had to follow up for a proper fix.

Cursor instruction:

UI result: Instead of implementing an edit page, Cursor duplicated the entire Tours component with a slightly modified title. The logic was incorrect and the structure cluttered.

❗ What Went Wrong

The instruction bundled together multiple functional and UI changes — page routing, form setup, CRUD logic, and confirmation handling — without a clear separation of concerns.

It was simply too much to ask in a single step. In hindsight, I should have broken it down into smaller, focused tasks (e.g., first create the edit page layout, then wire up each input, then handle delete separately).

When I moved beyond basic CRUD and started implementing multi-step logic, Cursor began to show its limits.

My goal was to introduce a review and rating system for tour guides — something that spanned multiple entities, views, and API layers.

My first attempt was to define the entire feature in one instruction.

Cursor Instruction:

“Create a public profile page at /guides/[id] that displays information about a tour guide. The guide profile should be visible to all users, and include: profile picture, name, location, languages spoken, years of experience, specialties, and a detailed bio. Also include a list of reviews for this guide. Show their average rating and number of reviews.”

“Allow logged-in users to leave a review for the guide on this page. Each review should include a 1–5 star rating, a comment, and be linked to the user who wrote it. Store reviews in a guide_reviews table.”

Code Result:

After generating and replacing a large amount of code, Cursor ran into multiple linter errors and began looping through the same fixes repeatedly. It went through about 4–5 cycles, not the “3 attempts” it claims in the UI. It even searched the Prisma documentation midway but still failed to resolve the problem (it was not a Prisma issue).

Worse, the more it tried to fix things, the more it broke previously working code.

Eventually, it asked for my help.

Its root cause analysis was mostly off — it only correctly flagged the issue with the reviewer relation.

After some debugging, my first instinct was to rewrite the feature manually. In the end, I reverted all the changes, but decided to give AI another shot — just with a different, more structured strategy.

❗ What Went Wrong:

The instruction covered too much — UI routing, form logic, database schema, review rules, and access control — all in one pass. Cursor struggled to manage this scope and maintain context, and the result became hard to debug, review, or trust. It was a clear case of needing to break down complexity into smaller, guided steps.

After reverting the first attempt, I decided to approach the feature like I would with a junior developer: break the task into isolated, testable chunks, give feedback often, and commit progress incrementally.

Here’s how it went:

✅ Split the Tours page:

✅ Add the needed fields to the DB:

✅ Create the review submission API:

✅ Build the client-side form:

✅ Create API to calculate average ratings:

✅ Create API to retrieve guide profile data:

✅ Add API to retrieve all reviews for a guide:

✅ Finally, build the UI to display a guide’s reviews:
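To give a sense of how small each of these steps can be, here is a minimal TypeScript sketch of the logic behind two of them: validating a review before it is saved, and summarizing a guide's ratings. The type and function names (`ReviewInput`, `validateReview`, `summarizeRatings`) are hypothetical and not taken from the project's repo, and the rule that a comment must be non-empty is an assumption. Database access is left out so each function can be checked on its own.

```typescript
// Hypothetical shapes: field names are illustrative, not taken from the actual repo.
interface ReviewInput {
  guideId: string;
  reviewerId: string;
  rating: number;
  comment: string;
}

type ValidationResult =
  | { ok: true; review: ReviewInput }
  | { ok: false; errors: string[] };

// Review submission step: validate the payload before touching the database.
function validateReview(input: ReviewInput): ValidationResult {
  const errors: string[] = [];
  if (!Number.isInteger(input.rating) || input.rating < 1 || input.rating > 5) {
    errors.push("rating must be an integer from 1 to 5");
  }
  if (input.comment.trim().length === 0) {
    // Assumption: empty comments are rejected.
    errors.push("comment must not be empty");
  }
  if (input.reviewerId.trim().length === 0) {
    errors.push("review must be linked to a user");
  }
  return errors.length === 0 ? { ok: true, review: input } : { ok: false, errors };
}

// Average rating step: summarize the ratings already stored for one guide.
function summarizeRatings(ratings: number[]): { average: number | null; count: number } {
  if (ratings.length === 0) {
    // No reviews yet: report null rather than a misleading 0.
    return { average: null, count: 0 };
  }
  const total = ratings.reduce((sum, r) => sum + r, 0);
  // Round to one decimal place for display.
  const average = Math.round((total / ratings.length) * 10) / 10;
  return { average, count: ratings.length };
}
```

Keeping these as pure functions means each one can be reviewed, tested, and committed separately, which fits the incremental approach described above.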

Once the core features were working, I started exploring whether Cursor could help me improve code quality, performance, and security. While it did generate suggestions, the results were mixed — and at times, risky.

Cursor frequently rewrote larger parts of the codebase — even when I only asked for small changes. This made ongoing code review essential.

Overall, it produced mostly decent code, but:

What frustrated me most was its tendency to overwrite already validated, higher-quality code — even without being prompted to touch those areas. I gave up on reviewing and maintaining these changes for my startup project. But for a corporate or production system, that approach simply wouldn’t be acceptable.

While this behavior may improve in future versions, I still find it difficult to guide the AI on when to treat existing code as “gospel” and when it’s safe to modify it. Even among senior developers, best practices are often debated — so expecting an AI to consistently get it right is a tall order.

At best, it might align with the highest standard it finds in the codebase.

My instruction: “review the current implementation for performance”.

Below its output:

It applied improvements in the files that (I assume) were in its current context — but not consistently across the codebase. Nothing broke, which was a win.

My instruction: “review the current implementation for security concerns”.

Below its output:

Cursor didn’t apply any changes automatically — instead, it listed several recommendations and asked whether I wanted to apply them one by one.

When I approved the suggestion related to environment variable handling, things went downhill quickly. It crashed the app, then tried to “fix” the issue by altering unrelated parts of the codebase — including my database schema. At one point, it even attempted to wipe and regenerate parts of the DB configuration.

I had to fully revert the changes.

❗ What Went Wrong:

The suggestions themselves weren’t necessarily bad, and they could serve as a good starting point. But applying them blindly (even with approval) caused cascading issues, and once Cursor goes off track, the damage tends to snowball.

For anything involving security, I’d recommend using it strictly as an advisory tool: useful only if you know what you’re doing and can apply its suggestions safely yourself.
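As a minimal sketch of what applying an environment variable suggestion by hand could look like, the check below validates required variables once at startup and touches nothing else. Cursor's actual suggestion is not reproduced here; the variable names (`DATABASE_URL`, `GOOGLE_CLIENT_SECRET`) and the `loadEnv` helper are hypothetical and should be replaced with whatever the app really reads.

```typescript
// Hypothetical variable names; substitute the ones your app actually uses.
const REQUIRED_VARS = ["DATABASE_URL", "GOOGLE_CLIENT_SECRET"] as const;

type RequiredVar = (typeof REQUIRED_VARS)[number];
type AppEnv = Record<RequiredVar, string>;

// Takes the environment as a plain object so the check is easy to test.
// In a Node.js app you would typically pass process.env here.
function loadEnv(source: Record<string, string | undefined>): AppEnv {
  const missing = REQUIRED_VARS.filter((name) => {
    const value = source[name];
    return value === undefined || value.trim() === "";
  });
  if (missing.length > 0) {
    // Report only the names, never the values, to avoid leaking secrets in logs.
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const env = {} as AppEnv;
  for (const name of REQUIRED_VARS) {
    env[name] = source[name] as string;
  }
  return env;
}
```

Because the change is confined to one function with no side effects, it can be reviewed as a single small diff and reverted without touching the database schema or configuration.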

Bug fixing with Cursor was a mixed experience. Sometimes it nailed issues on the first try — other times, it spiraled into unnecessary changes or overly aggressive refactoring.

Cursor performed well when I:

It even has a fully autonomous mode where it can run commands and inspect the output itself — though I kept that disabled to maintain more control. I found this helped avoid situations where it would change multiple files at once without clear visibility into why, making it difficult to follow and review at the end.

Example:

There were several cases where Cursor said the problem was fixed, but it wasn’t. Notably:

Cursor is most helpful when used in a QA-style debugging loop:

It’s still not reliable enough to fully drive the debugging or testing process on its own — especially for complex flows or codebases that have grown beyond a few tightly scoped files.

These were the areas where Cursor consistently added value and saved me time:

In short, Cursor worked best on well-scoped, conventional tasks, especially when the context was clear and the technology stack was popular.

While Cursor shined in well-scoped tasks, it struggled in areas that required stability, precision, or nuanced decision-making.

Overall, it’s helpful — but only if you stay in control, break down tasks clearly, and double-check everything.

Some limitations felt more fundamental — unlikely to improve much in the short term:

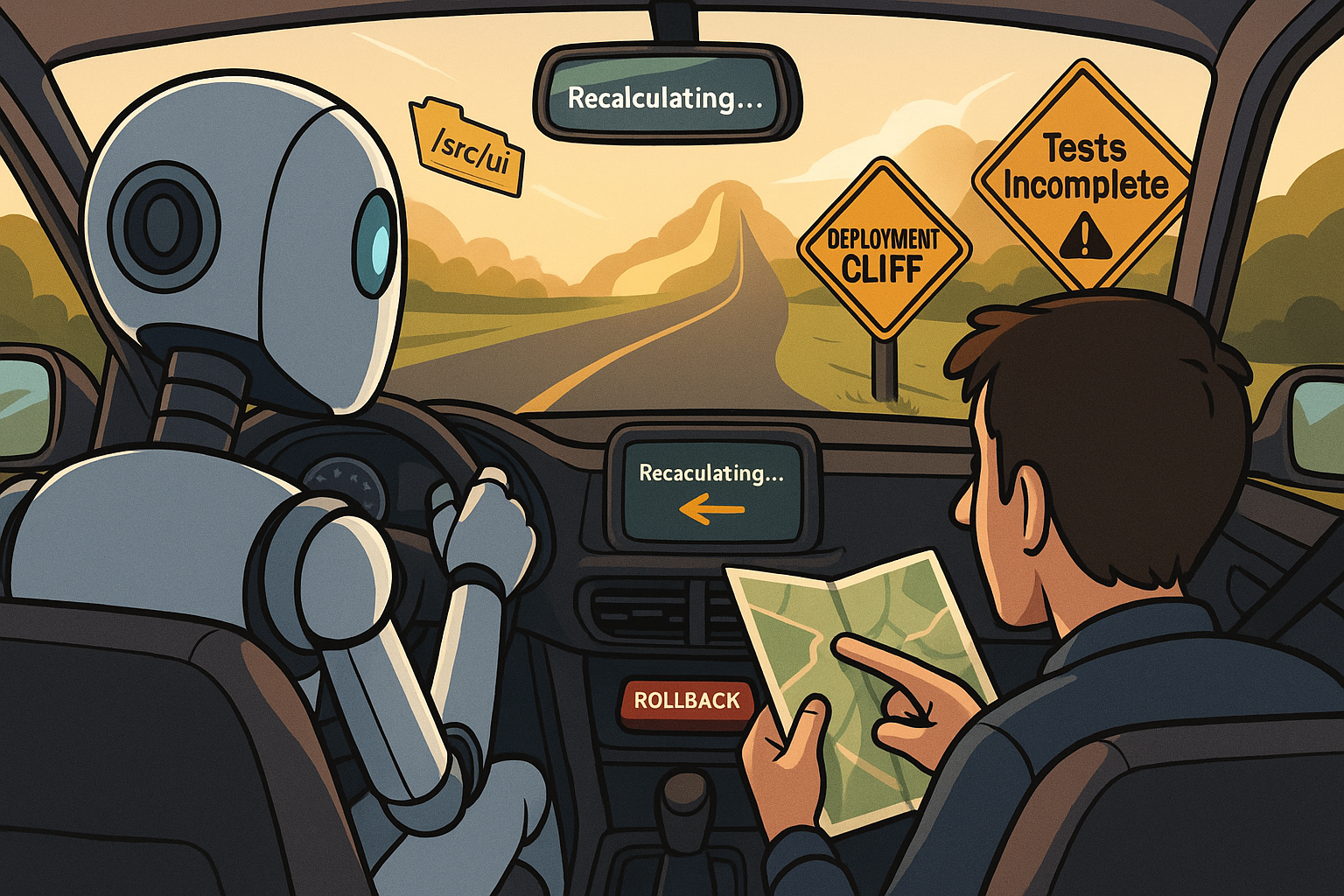

Working with Cursor AI was like managing a junior developer. To get good results, I had to:

It reminded me of mentoring an enthusiastic junior: if you explain clearly and review thoroughly, you’ll get decent results. If not, you’ll end up cleaning up the mess.

But in the end, I got a fully functional web app, built with a tech stack I was rusty with, in around 24 hours of total work.

I’ll continue to use Cursor in contexts where speed and flexibility matter more than polish:

✅ Rapid prototyping — quickly validate startup ideas using familiar, well-supported tech

✅ Front-end development — especially when functionality matters more than pixel-perfect design

✅ Agent “ask” mode — to explore unfamiliar parts of the codebase with better context than a standalone LLM

I don’t think so.

🙅♂️ I wouldn’t use the AI agent for enterprise-grade code, where I’d expect review time to outweigh any gains

🙅♂️ I wouldn’t rely on it for maintainable, secure, or performance-critical systems

🙅♂️ I wouldn’t trust it with large-scale refactors that span many files and dependencies

🙅♂️ And generally, I don’t see the benefit of using it for tasks where I’m already familiar with the technology. In many cases, writing a precise instruction, then reviewing and debugging the result, takes just as much time — if not more — than coding it myself.

After all, programming languages exist to express precise instructions to machines. Natural language is more flexible — but also vague and open to interpretation, which makes misunderstandings more likely.

“Computers are good at following instructions, but not at reading your mind.” — Donald Knuth

That said, for early-stage ideas and unfamiliar areas, like front-end stacks I haven’t mastered, it’s a useful assistant. It helps me move fast without getting blocked.

Based on my experience, here’s what helped:

✅ Use a spec file that defines architecture and goals to ramp up the project

✅ Use story-like prompts that reference real files or components

✅ Be specific — “Fix X in file Y. Do not touch Z” works far better than “Clean this up”

✅ Avoid vague verbs like “refactor,” “fix,” or “clean” unless tightly scoped

✅ Always define scope and constraints up front — say what to change, and what not to touch

✅ Break down complex tasks into atomic steps — especially those involving UI + API + DB

✅ Give feedback the way a QA teammate would, step by step: “I did X, expected Y, but saw Z”

✅ Don’t trust autosuggestions blindly — always review the diff before accepting

✅ Commit often so you can roll back easily if something breaks

✅ Use a separate branch or feature flag when testing larger changes

✅ Use ChatGPT or prompt engineering tools to refine instructions before sending them to Cursor

✅ Avoid relying on test generation unless starting with TDD in a small, focused scope
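The scoping and feedback tips above can be turned into reusable templates. The sketch below is one possible way to do that in TypeScript; `buildScopedPrompt` and `buildBugReport` are hypothetical helpers, not part of Cursor, and the file names in the usage comment are made up for illustration.

```typescript
// Hypothetical helpers: none of these names come from Cursor itself.
interface ScopedTask {
  change: string;       // what to change
  files: string[];      // files the agent may edit
  doNotTouch: string[]; // files or areas that must stay as they are
}

// Builds a "Fix X in file Y. Do not touch Z" style instruction.
function buildScopedPrompt(task: ScopedTask): string {
  const lines = [
    `Task: ${task.change}`,
    `Only edit: ${task.files.join(", ")}`,
  ];
  if (task.doNotTouch.length > 0) {
    lines.push(`Do not touch: ${task.doNotTouch.join(", ")}`);
  }
  return lines.join("\n");
}

interface BugReport {
  did: string;
  expected: string;
  saw: string;
}

// Builds QA-style feedback: "I did X, expected Y, but saw Z".
function buildBugReport(report: BugReport): string {
  return `I did: ${report.did}\nExpected: ${report.expected}\nBut saw: ${report.saw}`;
}

// Example usage (file names are illustrative):
// buildScopedPrompt({
//   change: "Fix the date format on the tour card",
//   files: ["components/TourCard.tsx"],
//   doNotTouch: ["prisma/schema.prisma"],
// });
```

Writing the constraints down as fields makes it harder to forget the "what not to touch" part, which is the one most easily left out of a quick prompt.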

This project helped me understand AI coding assistants for what they really are:

Not geniuses — but tireless juniors. Fast, curious, eager to help… but often missing the bigger picture.

Would I recommend Cursor? Absolutely — for indie developers, startup prototypes, or exploring unfamiliar stacks.

But would I let it commit directly to production?

Not yet.

Tried something similar? Share your setup, what worked for you, or where it fell short.
